MuseMorphose, A Transformer-based VAE

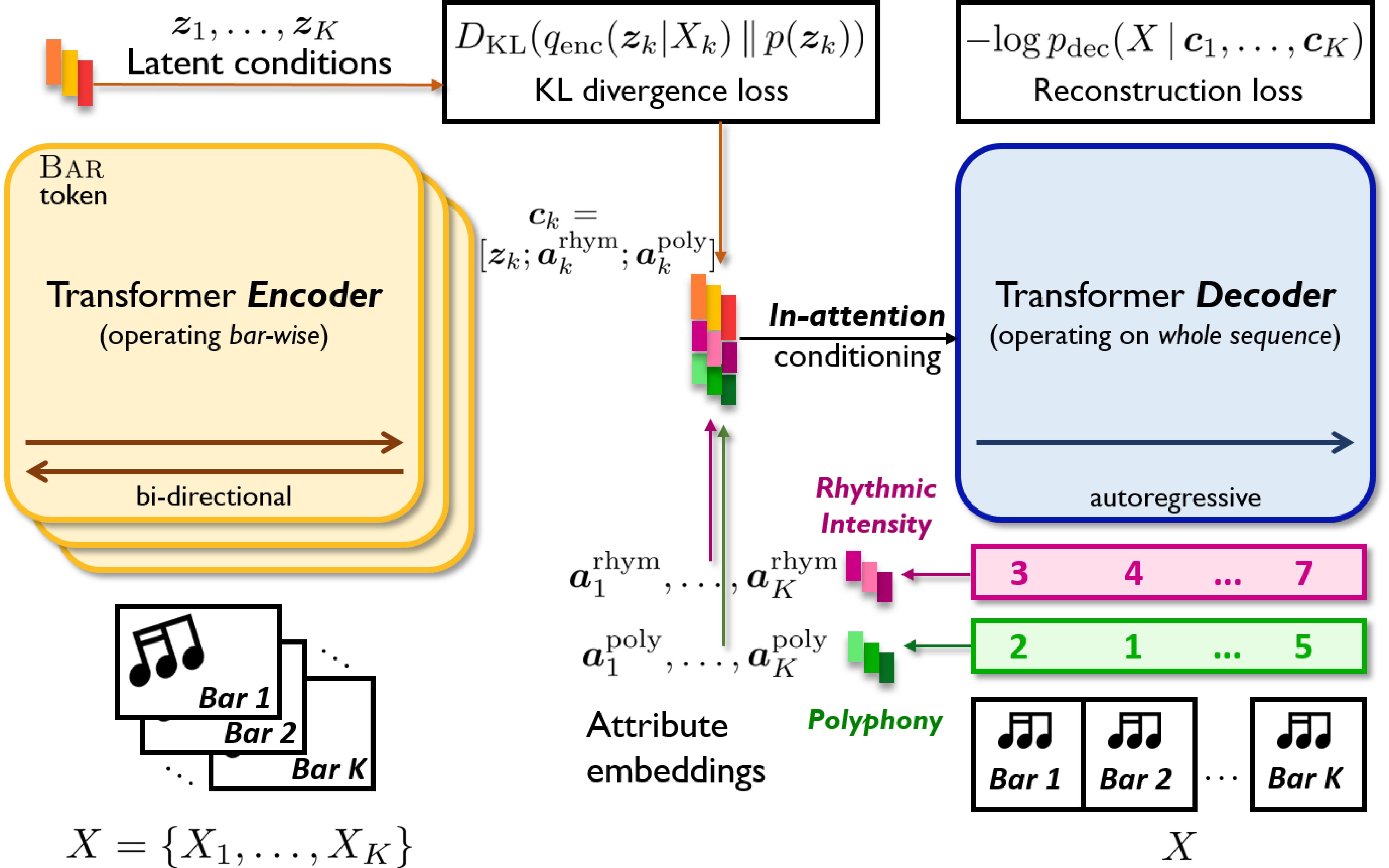

- We develop the in-attention mechanism to firmly control Transformer decoders with segment-level, dense conditions.

- We then bridge the full song-level in-attention decoder and a bar-wise Transformer encoder to construct our MuseMorphose model.

- Trained with the VAE objective alone, MuseMorphose can perform style transfer on long musical pieces, while allowing users to control musical attributes down to the bar level.

Paper

- Shih-Lun Wu, Yi-Hsuan Yang

MuseMorphose: Full-Song and Fine-Grained Piano Music Style Transfer with One Transformer VAE

accepted to IEEE/ACM Trans. Audio, Speech, & Language Processing (TASLP), Dec 2022

[paper] [supplement] [code] [BibTex]

Brief Technical Overview

MuseMorphose is trained on expressive pop piano performances from the AILabs.tw-Pop1.7K dataset. A slightly revised Revamped MIDI representation (REMI; Huang and Yang, 2020) is adopted to convert the music into token sequences.

Controllable Attributes

We consider the following two computable, bar-level attributes:

- Rhythmic intensity: The percentage of quarter beats in a bar that contain at least one note onset.

- Polyphony: The average number of notes hit or held on each quarter beat.
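As a rough illustration of the two definitions above, the sketch below computes both raw scores for a single bar. It assumes (this is not stated on this page) that a bar is divided into 16 quarter-beat positions, i.e. four per beat in 4/4 time, and that each note is given as a hypothetical `(onset, duration)` pair measured in those positions. The function name `bar_attributes` is ours, and rhythmic intensity is returned as a fraction in [0, 1] rather than a percentage.

```python
import numpy as np

SUBBEATS_PER_BAR = 16  # assumption: 4/4 bar, four quarter-beat positions per beat


def bar_attributes(notes, n_sub=SUBBEATS_PER_BAR):
    """Return (rhythmic_intensity, polyphony) for one bar.

    notes: iterable of (onset, duration) pairs, both integers counted in
           quarter-beat positions relative to the start of the bar.
    """
    has_onset = np.zeros(n_sub, dtype=bool)  # does a note start at this position?
    active = np.zeros(n_sub, dtype=int)      # notes hit or held at this position
    for onset, duration in notes:
        has_onset[onset] = True
        end = min(onset + max(duration, 1), n_sub)  # clip notes at the bar line
        active[onset:end] += 1
    rhythmic_intensity = float(has_onset.mean())  # fraction of positions with an onset
    polyphony = float(active.mean())              # average number of sounding notes
    return rhythmic_intensity, polyphony


# Toy bar: onsets on each of the four beats, with some overlapping notes.
notes = [(0, 4), (0, 8), (4, 4), (8, 8), (12, 4)]
print(bar_attributes(notes))  # (0.25, 1.75)
```

Notes that sustain past the bar line are simply clipped here; a fuller implementation would also count notes held over from the previous bar.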

Following Kawai et al. (2020), for each attribute, we assign each bar an ordinal class from \(\{0, 1, \dots, 7\}\) according to the computed raw score. The attributes are turned into learnable attribute embeddings

\[\boldsymbol{a}^{\text{rhym}}, \boldsymbol{a}^{\text{poly}} \in \mathbb{R}^{d_{\boldsymbol{a}}}\]

fed to the decoder through in-attention to control the generation.

We note that more attributes can be potentially included, such as rhythmic variation (ordinal), or composing styles (nominal), just to name a few.
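The mapping from raw scores to ordinal classes, followed by the embedding lookup, might look like the sketch below. How the class boundaries are chosen is not specified on this page; the sketch assumes equal-frequency (quantile) boundaries fitted on training scores, which is only one plausible choice. The embedding size `D_ATTR`, the random stand-in data, and the helper names `fit_boundaries` and `to_class` are all hypothetical.

```python
import numpy as np

N_CLASSES = 8  # ordinal classes 0..7, as in the text
D_ATTR = 4     # hypothetical embedding size d_a


def fit_boundaries(raw_scores, n_classes=N_CLASSES):
    """Assumption: boundaries are equal-frequency quantiles of the training scores."""
    qs = np.linspace(0.0, 1.0, n_classes + 1)[1:-1]  # 7 interior quantile levels
    return np.quantile(raw_scores, qs)


def to_class(raw_scores, boundaries):
    """Map raw scores to ordinal classes in {0, ..., len(boundaries)}."""
    return np.searchsorted(boundaries, raw_scores, side="right")


rng = np.random.default_rng(0)
train_scores = rng.uniform(0.0, 1.0, size=1000)  # stand-in for real per-bar scores
bounds = fit_boundaries(train_scores)

# Classes for three bars with a very low, a medium, and a very high raw score.
classes = to_class(np.array([0.0, 0.5, 1.0]), bounds)

# Embedding table: random here, but it would be learned jointly with the model.
emb_table = rng.normal(size=(N_CLASSES, D_ATTR))
a_rhym = emb_table[classes]  # one d_a-dimensional vector per bar
```

Because the classes are ordinal, a user can raise or lower an attribute for a bar simply by picking a higher or lower class index before the lookup.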

In-attention Conditioning

To maximize the influence of bar-level conditions (i.e., \(\boldsymbol{c}_k\)’s) on the decoder, we inject them into all \(L\) self-attention layers via vector summation:

\[\begin{alignat}{2} \tilde{\boldsymbol{h}}^l_t &= \boldsymbol{h}^l_t + {\boldsymbol{c}_k}^{\top} W_{\text{in}} \,, \; \; \; \; &\forall \, l \in \{0, \dots, L-1\} \; \text{and} \; \forall \, t \in I_k \, , \\ \boldsymbol{c}_k &= \text{concat}([\boldsymbol{z}_k, \boldsymbol{a}^{\text{rhym}}_k, \boldsymbol{a}^{\text{poly}}_k])\, , &\forall \, k \in \{1, \dots, K\} \, , \end{alignat}\]

where \(W_{\text{in}}\) is a learnable projection, \(\boldsymbol{z}_k\) is the latent vector of the \(k^{\text{th}}\) bar produced by the bar-wise encoder, \(I_k\) stores the timestep indices for the \(k^{\text{th}}\) bar, and \(\tilde{\boldsymbol{h}}^l_t\)’s are the modified hidden states of layer \(l\).

This mechanism promotes tight control by constantly reminding the model of the conditions’ presence.
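A minimal NumPy sketch of the summation described above follows. It is not the released implementation: the toy dimensions, the `tanh` stand-in for a real self-attention layer, and the names `in_attention_inputs`, `bar_ids`, and `toy_layer` are assumptions made for illustration. Bars are indexed from 0 in the code, whereas the formula counts from 1.

```python
import numpy as np


def in_attention_inputs(h, c, bar_ids, W_in):
    """Add the projected bar-level condition to every hidden state.

    h:       (T, d)   hidden states entering one layer
    c:       (K, d_c) one condition vector per bar
    bar_ids: (T,)     bar index of each timestep (plays the role of I_k)
    W_in:    (d_c, d) projection shared by all layers
    """
    return h + c[bar_ids] @ W_in


rng = np.random.default_rng(0)
T, K, d, d_z, d_a, L = 6, 2, 8, 5, 3, 4  # toy sizes, chosen arbitrarily

z = rng.normal(size=(K, d_z))       # per-bar latents (from the encoder)
a_rhym = rng.normal(size=(K, d_a))  # rhythmic-intensity embeddings
a_poly = rng.normal(size=(K, d_a))  # polyphony embeddings
c = np.concatenate([z, a_rhym, a_poly], axis=1)  # c_k = concat([z_k, a_rhym_k, a_poly_k])

W_in = rng.normal(size=(c.shape[1], d))
bar_ids = np.array([0, 0, 0, 1, 1, 1])  # first three tokens in bar 0, rest in bar 1


def toy_layer(x):
    """Stand-in for a real self-attention layer (assumption for this sketch)."""
    return np.tanh(x)


h = rng.normal(size=(T, d))
for l in range(L):
    h_tilde = in_attention_inputs(h, c, bar_ids, W_in)  # condition re-injected at every layer
    h = toy_layer(h_tilde)
```

The point of the loop is that the condition is added before each of the \(L\) layers rather than only at the input, so it cannot fade as the hidden states pass through the stack.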

Listening Samples

The samples demonstrate that MuseMorphose attains high fidelity to the original song, strong attribute control, good diversity across generations, and excellent musicality, all at the same time.

8-bar Excerpt #1

- Original (mid rhythm & polyphony)

- Generation #1, high rhythm & polyphony

- Generation #2, ascending rhythm & polyphony

- Generation #3, descending rhythm & polyphony

8-bar Excerpt #2

- Original (mid rhythm & polyphony)

- Generation #1, low rhythm & polyphony

- Generation #2, high rhythm, ascending polyphony

- Generation #3, descending rhythm, high polyphony

8-bar Excerpt #3 (Mozart’s “Ah vous dirai-je, Maman”)

- Original (theme melody only)

- Generation #1, ascending rhythm & polyphony

Full Song #1

- Original (121 bars)

- Generation, increased rhythm & polyphony

Full Song #2

- Original (87 bars)

- Generation, increased rhythm, decreased polyphony

Baseline Samples

Below we provide some compositions by RNN-based baseline models for comparison.

MIDI-VAE (Brunner et al., 2018)

- Original #1 (mid rhythm & polyphony)

- Generation #1, low rhythm & polyphony

- Original #2 (mid rhythm & polyphony)

- Generation #2, high rhythm & polyphony

Attributes-aware VAE (Kawai et al., 2020)

- Original #1 (mid rhythm & polyphony)

- Generation #1, low rhythm & polyphony

- Original #2 (mid rhythm & polyphony)

- Generation #2, high rhythm & polyphony

Authors and Affiliations

- Shih-Lun Wu

Research Intern @ Taiwan AI Labs / Senior CS Major Undergrad @ National Taiwan University

b06902080@csie.ntu.edu.tw

[website]

- Yi-Hsuan Yang

Chief Music Scientist @ Taiwan AI Labs / Associate Research Fellow @ Academia Sinica

affige@gmail.com, yhyang@ailabs.tw

[website]